Tencent Cloud launches Xingmai Network 2.0 to speed up large model training by another 20%

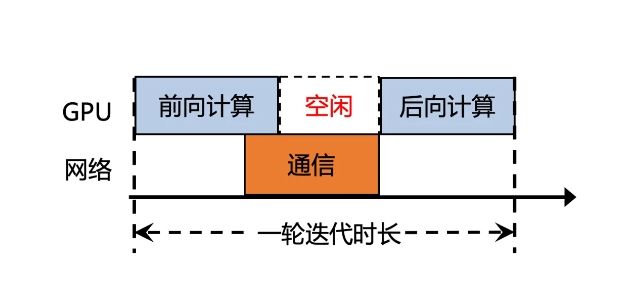

Tencent Cloud recently launched Xingmai Network 2.0, an upgrade of its Xingmai network aimed at making large model training more efficient. With the previous version, the communication time spent synchronizing computation results during large model training accounted for more than 50% of the total, resulting in low efficiency. Xingmai Network 2.0 brings upgrades in several areas:

1. Supports networking of 100,000 GPU cards in a single cluster, doubling the previous scale. Network communication efficiency rises by 60%, large model training efficiency rises by 20%, and fault location time drops from days to minutes.

2. Self-developed network equipment, including switches, optical modules, and network cards, has been upgraded to make the infrastructure more reliable and to support a single cluster of more than 100,000 GPU cards.

3. The new communication protocol TiTa2.0 is deployed on the network card, and its congestion algorithm has been upgraded to active congestion control. This increases communication efficiency by 30% and large model training efficiency by 10%.

4. The high-performance collective communication library TCCL2.0 uses NVLINK+NET heterogeneous parallel communication to transmit data in parallel. It also includes the Auto-Tune Network Expert adaptive algorithm. The library improves communication performance by 30% and large model training efficiency by 10%.

5. The newly added Lingjing simulation platform, a technology exclusive to Tencent, monitors the entire cluster network, accurately locates GPU node problems, and reduces the time to locate faults in training at the 10,000-card scale from days to minutes.

Through these upgrades, the communication efficiency of the Xingmai network has increased by 60%, large model training efficiency has increased by 20%, and fault location has become more accurate. These gains should allow expensive GPU resources to be used more fully.
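
As a rough sanity check on how a communication gain translates into a training gain, the relationship can be sketched with a simple Amdahl-style model. This is only a back-of-the-envelope illustration, not Tencent's methodology: it assumes communication and computation do not overlap, and it reads "communication efficiency up 60%" as communication throughput becoming 1.6 times higher. The function name `training_speedup` and its parameters are hypothetical.

```python
def training_speedup(comm_fraction: float, comm_gain: float) -> float:
    """Estimate overall training speedup when only communication gets faster.

    comm_fraction: share of each training step spent on communication (0 to 1).
    comm_gain: relative gain in communication throughput (0.6 means 1.6x).

    Assumption: communication and computation run back to back with no overlap.
    """
    if not 0.0 <= comm_fraction <= 1.0:
        raise ValueError("comm_fraction must be between 0 and 1")
    if comm_gain <= -1.0:
        raise ValueError("comm_gain must be greater than -1")

    compute_time = 1.0 - comm_fraction                  # unchanged by the upgrade
    new_comm_time = comm_fraction / (1.0 + comm_gain)   # shrinks with faster network
    return 1.0 / (compute_time + new_comm_time)


# Figures taken from the article: 50% communication share, 60% communication gain.
speedup = training_speedup(comm_fraction=0.5, comm_gain=0.6)
print(f"Estimated training speedup: {speedup:.2f}x")
```

Under these assumptions the estimate comes out at about 1.23x, which is in the same range as the 20% training gain quoted above. Real systems overlap communication with computation to varying degrees, so the actual relationship may differ.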
